LLMs as Answer Engines & SEO: There Are Only So Many 'Right' Answers

You wouldn't tell your friend to go to Albany first if they were driving from Boston to Hartford, and other observations about answer engines and SEO.

Earlier this year I set out to understand what patterns, if any, there are in how and why answer engines (AI tools like ChatGPT, Claude, Perplexity and others used as replacements for traditional search engines) cite the sources they do in their output for a given entry (“prompt”). I published the condensed version of that research with an agency I work with, Session Interactive, on their blog. You can read the full post here: https://sessioninteractive.com/blog/getting-cited-and-seen-in-ai-search-results/ .

In summary, the experiment, results and considerations were as follows:

The Experiment

I took 2 small businesses in different industries, each with varying quantities of pages and content ranking on the first page of Google and Bing.

I devised 20 different prompts/queries with different ranges of detail, length, specificity, formatting and framing.

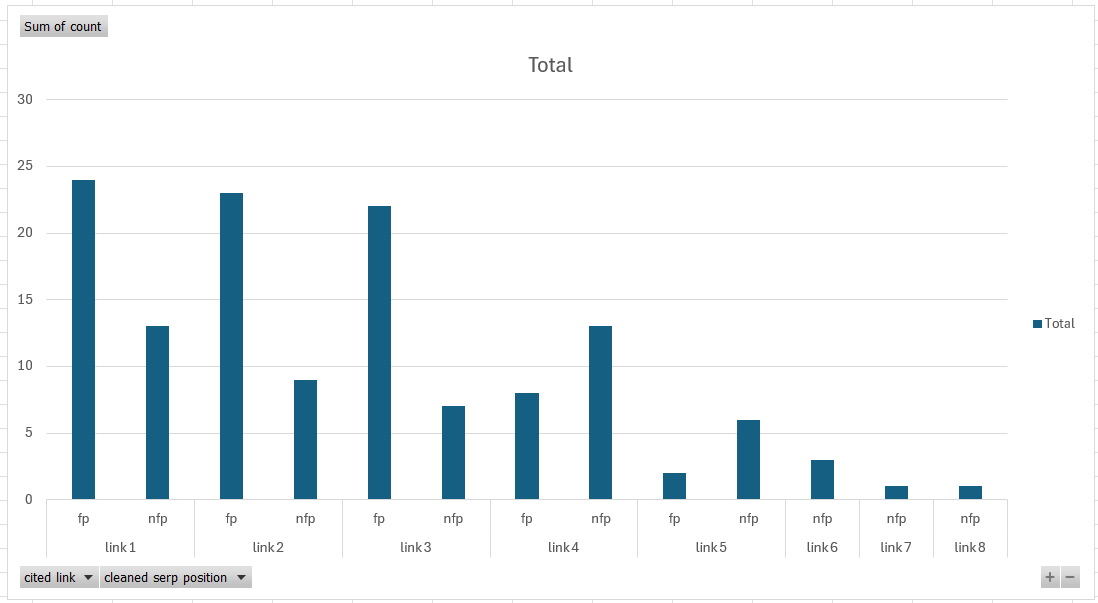

I entered each prompt into ChatGPT and made note of the count of citations, the cited links and their order (1, 2, 3 and so on). I then entered the same prompt, now a query, into Bing search and attempted to find the same cited page in Bing SERPs.

To simplify the SERP findings, a cited link found on the first page of Bing SERPs was marked ‘fp’, and a cited link not found on the first page was marked ‘nfp’ (not on the front page).

A link counted as first page if it appeared as a blue link, map result, rich snippet, expert window, sidebar Discovery/short answer or other rich media result on the first page.
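The tallying described above can be sketched in a few lines of Python. This is a minimal sketch under stated assumptions, not the actual process or data from the experiment: the sample rows are made up for illustration, and the names `observations`, `tally_by_position` and `front_page_share` are hypothetical.

```python
from collections import Counter, defaultdict

# Hypothetical observations, NOT the study's data: one tuple per cited link,
# (prompt id, citation position in the ChatGPT output, 'fp' or 'nfp').
observations = [
    (1, 1, "fp"), (1, 2, "nfp"),
    (2, 1, "fp"), (2, 2, "fp"), (2, 3, "nfp"),
    (3, 1, "nfp"), (3, 2, "nfp"),
    (4, 1, "fp"),
]


def tally_by_position(rows):
    """Count 'fp' and 'nfp' labels for each citation position."""
    counts = defaultdict(Counter)
    for _prompt_id, position, label in rows:
        counts[position][label] += 1
    return counts


def front_page_share(counts, position):
    """Fraction of citations at a position that were on the front page."""
    total = sum(counts[position].values())
    return counts[position]["fp"] / total if total else 0.0


counts = tally_by_position(observations)
for position in sorted(counts):
    share = front_page_share(counts, position)
    fp = counts[position]["fp"]
    nfp = counts[position]["nfp"]
    print(f"link {position}: fp={fp} nfp={nfp} ({share:.0%} on front page)")
```

Grouping by citation position, rather than by prompt, is what makes it possible to compare link 1 against link 4 and later.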

The Results

Just under 60% of the time, the first cited link in the ChatGPT output was also on the first page of Bing SERPs. What’s particularly interesting is that, even among those first citations (link 1), close to half weren’t on the front page, and that from link 4 onwards, more of the cited links were not on the front page than on it.

The second most frequent bucket that cited links would fall into was the ‘not found’ bucket.

I also observed that when multiple citations were included in a ChatGPT output, ‘not found’ links made up the next most populated bucket for the other links. In other words, it appeared ChatGPT would simply find other links to cite on a given prompt.

For pages known to rank on the front page of Bing for a specific term, prompts that included that keyword, framed in different ways, returned those pages as the first or second citation, as expected, and the brand was mentioned.

Considerations

It was impossible to get identical SERPs prompt to prompt. Bing would provide different smatterings of links and rich media each time a query was run.

There were a handful of times where another link from a brand would appear on the front page of Bing but not the exact link originally cited in the ChatGPT output. In those cases, those links were marked nfp.

This is a small sample set - very small - with one engine. Time and the availability of businesses where I have access to Google and Bing Search Consoles limited how far I could check my work. Further testing on other sites in other engines would help validate or invalidate these findings.

The sections below were my attempt to get more into the why behind these results. These parts were edited out of the published post above.

The TLDR is that, to me, there are only so many ‘right’ or ‘acceptable’ answers to any given prompt, no matter how you word or phrase it. Therefore, these tools will first pull whatever is on the front page of the search engine the LLM predominantly draws from, the algorithmically deemed ‘correct’ answer, and then pull some other source from somewhere else because it is, essentially, the same answer to the question with some differences in details and presentation.

Why Are These the Results?

To answer the first question, how do I get cited by an answer engine (AE)?, the answer seems to steer towards having something on the front page of Bing. Getting to the front page, so far, doesn’t involve anything beyond the methods and tactics that SEOs have applied for years to get content to rank first.

The second question, how does the citation feature work?, seems partly answered by the same thing: having front page content.

The other part is more complicated and prompts a third question: why would ChatGPT cite other things at all for all of our prompts? Why include anything else other than things on the front page, the things that Bing and Google algorithmically decided were the ‘best answers’ by their ranking criteria?

One conclusion I came up with is that most questions have parity answers online, so AEs just pick something else to add.

Consider this idea. Imagine someone asks you how to drive from Boston, Massachusetts to Hartford, Connecticut. You, being someone from New England, might propose 3 commonly used routes, the most common of which is I-90 to I-84. You might also say 90 to 291 to 91. You might even say 93 to 95 to 495 to 290 to 90 to 84. The latter 2 options are progressively more time consuming and challenging, but you’ll get to Hartford from Boston in between 90 and 120 minutes. Let’s think of these 3 commonly held ways of traveling from Boston to Hartford by car as links to articles on the front page of Google in positions 1, 2 and 3.

You could, also, go all the way west to Albany from Boston, then double back through Massachusetts and then go south to Hartford. You could also say go from Boston to Los Angeles, come back across the Great Lakes, through New York City and then to Hartford. You could also say go up to Maine and then down to Connecticut. Let’s think of these 3 solutions as article links on pages 8, 9 and 10 of Google - links not on the front page.

All 6 of these are solutions to the question of how to get from Boston to Hartford. Realistically, anyone who is from the area and doesn’t take joy in sending people into New England traffic purgatory would advise 90 to 84 first and foremost. Including all 6 ways - links - in an answer is fine for the receiver insofar as the latter 3 ideas are just additional context and perspective. They’re absurd - but they’re not wrong. You could also simply not include them and get the point across just the same.

In other words, these are all ways to get to Hartford. 3 are commonly accepted and the latter 3 aren’t. 6 solutions total. There don’t need to be 5 million search results to answer this question, but there are, and they all fall, in effect, somewhere on the spectrum between the first 3 suggestions and the latter 3 suggestions. If you’re an answer engine returning a result and you have a front page of answers to choose from and then literally everything else online, most of which says the same stuff with anywhere from exactly the same wording to none of it, then of course you’d start with what Google or Bing deems correct by way of their algorithm and then just pick something else.

Are Answer Engines & Using AI for Searches a Threat to Google, SEO and Advertising? Is OpenAI Going to Overtake Google?

In response to ChatGPT, Google, Bing and other engines have introduced AI Overview-style appendages at the top of search result pages. In doing so, Google and Bing gave users a similar instant, plain-speak answer in response to a search query, now complete with citations. Adding these overviews to each company’s search engine makes sense and was inevitable for several reasons. First, to keep up with the trend OpenAI started. Second, one could argue, to help Google reclaim some lost market share. Third, an AI overview attempts to remedy some of the longstanding issues users have had with Google since around 2021, including but not limited to excessive advertisements, relevancy issues, duplicate, low quality or excessively lengthy content, SEO-ified content and affiliate link-riddled content. It may also serve to push publishers to pay for their traffic and boost the company’s flattening earnings.

Up until this point, you, the reader, might think that the answer to the question of whether Google is at risk of crumbling to OpenAI and suffering a fate similar to Yahoo and AOL is yes. However, a recent study from SparkToro found that it’s far from a definitive yes. The study covers many points, but chief among them is that since Google introduced AE/AI Overview qualities to its search results, user sentiment towards Google has actually improved. It makes sense why. The AI Overview remedies most of the issues outlined above and returns value to users searching on Google. These remedies, however, come at the cost of publishers and businesses that benefit from free organic traffic. They are expected to exacerbate the zero click traffic situation that has been slowly rising in the background for the last few years, without any recourse that can provide the same consistent volume of free traffic.

It is not an outrageous claim to say that AI/LLM/AE has become a commodified product in the 3 years since OpenAI introduced ChatGPT. Most AI tools draw from the same publicly available data sets and are supplemented with proprietary ones secured through partnerships. The Google and Reddit partnership is a noteworthy one. Moreover, most if not all commercial AI tools are skins over OpenAI or another publicly available AI API. ChatGPT loses its moat, and jaded Google users lose a significant reason to jump ship: you can get ChatGPT-style features natively in Google and Bing.

At time of writing, it would seem that a migration away from Google is unlikely. Instead, what is more likely is that AI search simply becomes another way people use the internet, some of which will happen inside this latest iteration of Google, along with a shift in what finding information online means.

Last Thoughts

At time of writing this, I don’t believe there is an ‘angle’ to answer engines. Nothing from an expert or the major providers themselves leads me, yet, to think that there are openings that can be taken advantage of the same way brands took advantage of search algorithms for the last 20 years. I think we, marketing types and those adjacent to marketing types, are going to see experts emerge with new ‘solutions’ that sound promising but will quickly reveal themselves to be nonsense. Brand mentions and writing AE-first content are among the newest terms in circulation to take with a grain of salt.

Everything changes and everything seems to find a way to thrive. I’m sure the industry and the media spoke about AOL and Yahoo the same way we’re talking about OpenAI today and talked about Google 10 years ago. Everything is cyclical and everything changes.